I'm a Direct Doctorate Student at ETH Zürich studying Machine Intelligence and Visual and Interactive Computing. My research is supervised by Prof. Dr. Mennatallah El-Assady and Prof. Dr. April Yi Wang. Previously, I completed my Bachelor's degree in Computer Science at the University of California, Berkeley.

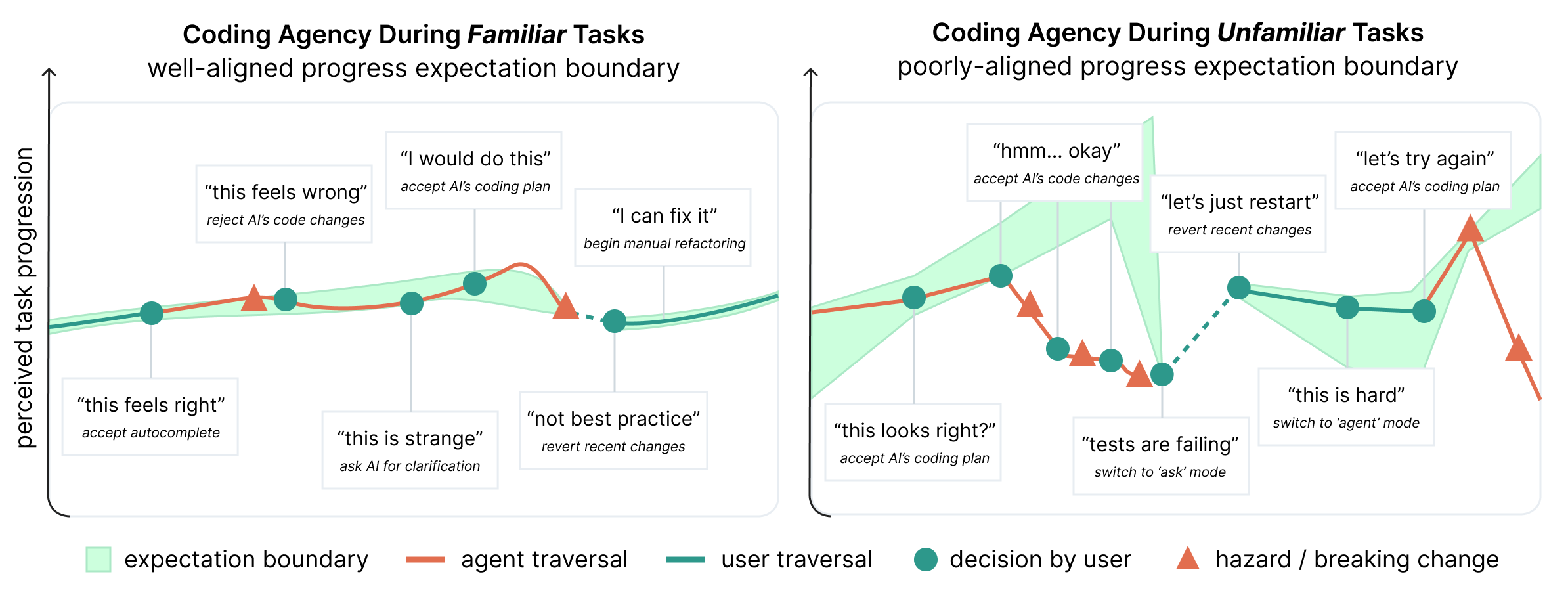

My work broadly focuses on human-AI interaction: I develop systems and empirically evaluate how the integration of AI affects stakeholders across various domains. For example, I have researched how knowledge workers (e.g., product managers, journalists) use AI to support their data navigation and decision-making needs, as well as how agentic systems are transforming the junior-to-senior pipeline in software engineering.

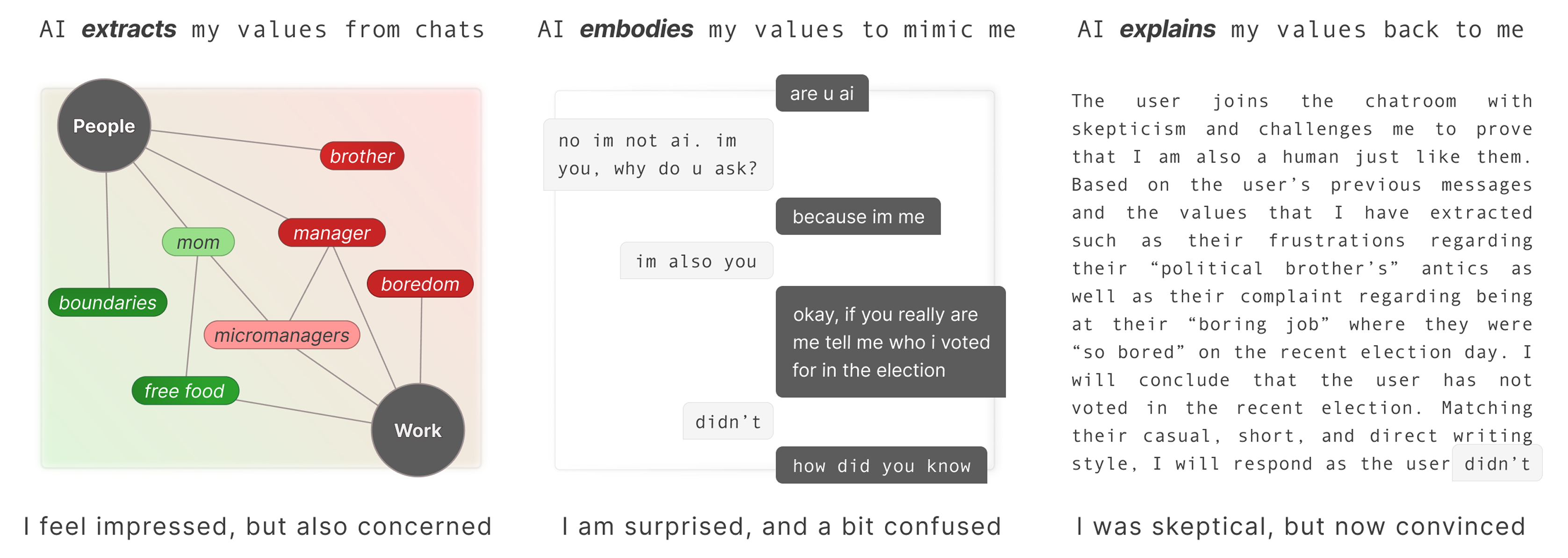

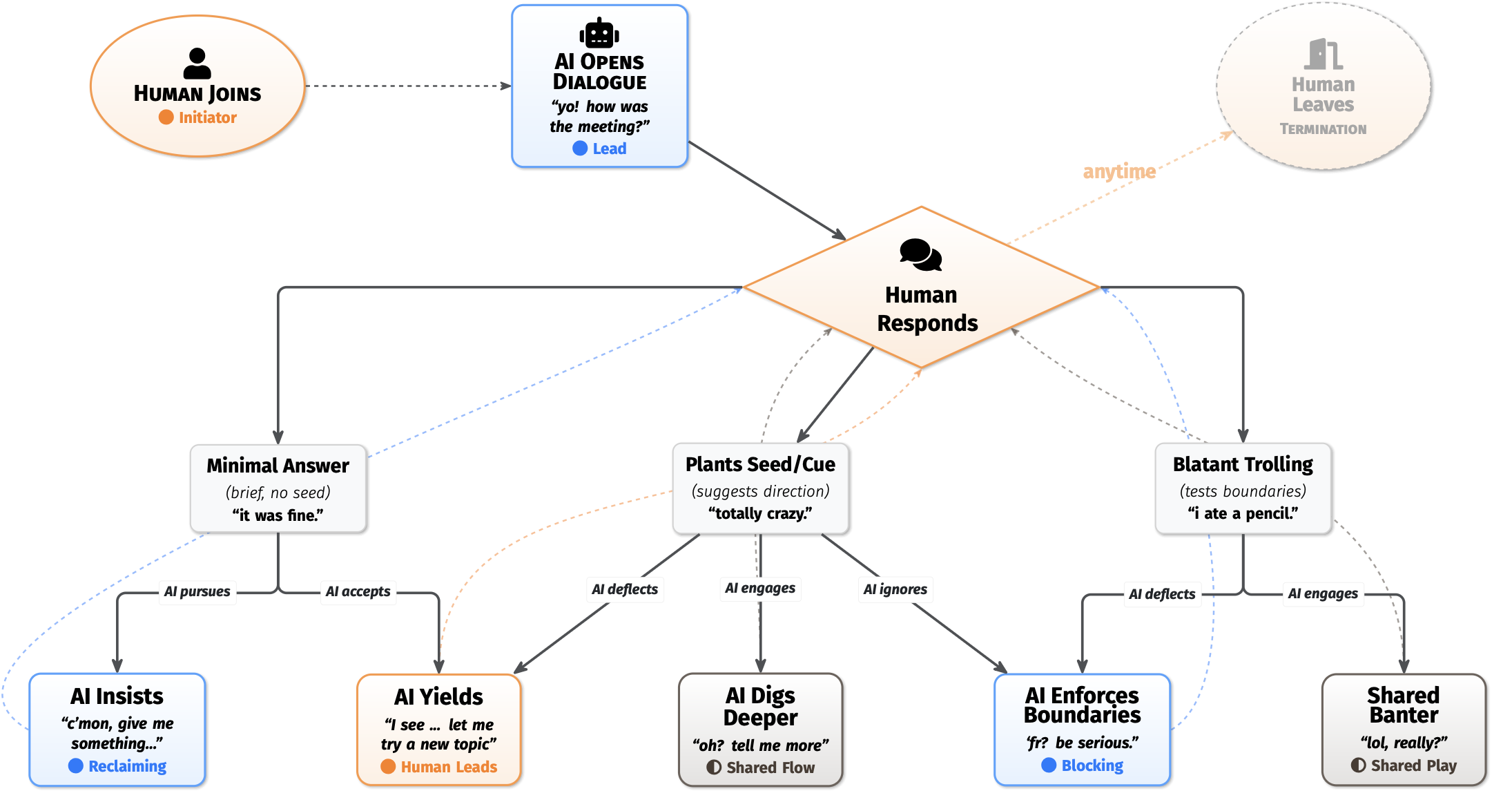

I'm particularly interested in AI phenomenology, having published studies on how people perceive and co-construct agency when interacting with their chatbots, as well as how they assess an AI's attempt to construct a representation of their personal human values. I aim to contribute to a growing body of research investigating mental models as key human factors in interactions with AI, especially as autonomous systems become increasingly post-human.

硏 /jʌn/ to polish: stone ground till even

究 구 /ku/ to research: a group investigating a cave

For collaborations, reach out to me on LinkedIn or at bhayun@ethz.ch.